Should we use logging in Test Automation Code?

Everything that we do in software development is a choice that either helps us or hurts us (The Valley of Success by Titus Fortner). Furthermore, we must always consider the cost of maintenance in everything that we do, because the cost of maintenance far outweighs the cost of implementation.

Creating software that’s easier to maintain is almost always better. Keep this in mind as this is our guiding principle for everything that we do in this course.

Our team of Solutions Architects has likely written over 25 of our own frameworks and has analyzed the source code of hundreds of frameworks from all over the world. We’ve seen solutions with logging and without logging.

I believe that automation code without logging is more stable and easier to maintain. Why?

Because with the correct implementation and tech stack, we can get all of the following for basically zero implementation effort:

- Rich logs;

- Videos;

- Screenshots.

Programming language commands

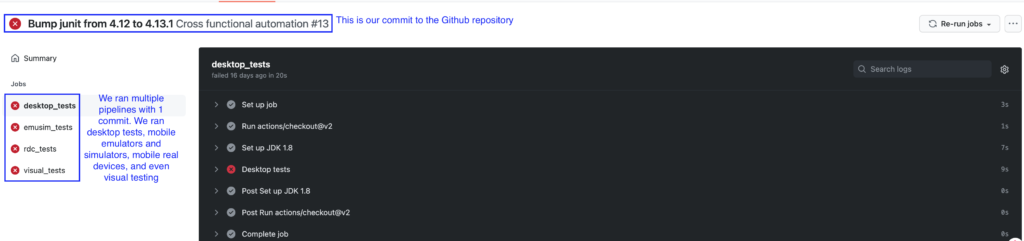

If we assume that in the 21st century all test automation code runs through a CI/CD pipeline, then the correct tool will automatically log relevant information for us, with zero extra effort. For instance, check out the logs from GitHub workflows in this automation:

These logs are thousands of lines long and show everything we need to know about what happened and why.

You can learn how to do this in the Continuous Integration section of our Complete Selenium WebDriver with Java Bootcamp course.

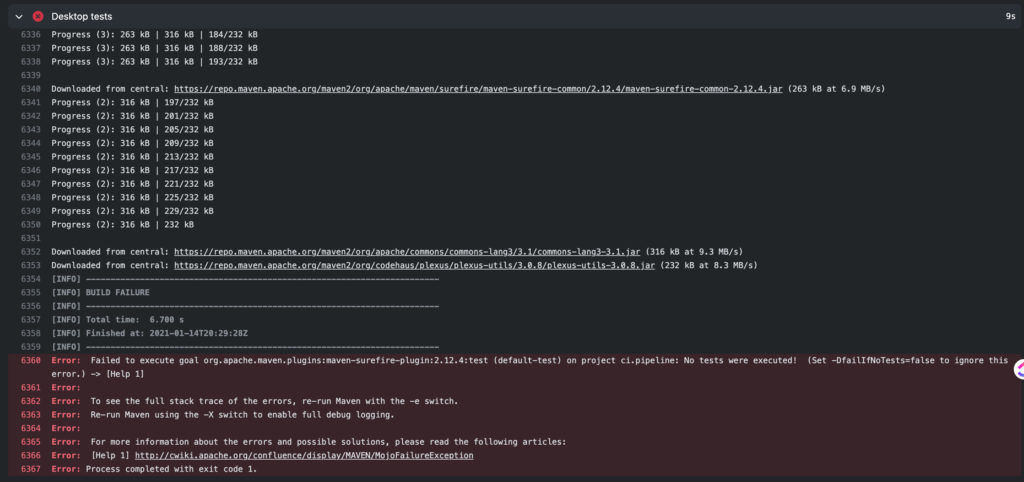

Here are the failure logs from the Desktop tests. Are you able to determine what went wrong and why these tests failed?

As the error states, “there were no tests to execute”. This error reporting came simply from Maven and GitHub Actions displaying these errors, with no extra code.
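The same idea can be sketched in plain Java without any logging framework. When a check fails, the exception message itself carries the context, and the build tool and CI runner print that message together with the stack trace. This is only an illustrative sketch: the class and method names (`TitleCheck`, `verifyTitle`) and the page titles are hypothetical, and the `main` method merely imitates what a test runner would report.

```java
public class TitleCheck {

    // Hypothetical check standing in for a real test assertion.
    // It throws with a descriptive message instead of writing to a logger.
    public static void verifyTitle(String expected, String actual) {
        if (!expected.equals(actual)) {
            throw new AssertionError(
                "Page title mismatch: expected \"" + expected
                    + "\" but was \"" + actual + "\"");
        }
    }

    public static void main(String[] args) {
        // A passing check produces no output at all.
        verifyTitle("Home", "Home");

        // A failing check throws. A real test runner would report the
        // message and stack trace for us; here we catch it only to show
        // what that report would contain.
        try {
            verifyTitle("Home", "Login");
        } catch (AssertionError e) {
            System.out.println("FAILED: " + e.getMessage());
        }
    }
}
```

In a real suite the `try`/`catch` would not exist. The failure would propagate to the test runner, and Maven and the CI system would display it with no extra code.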

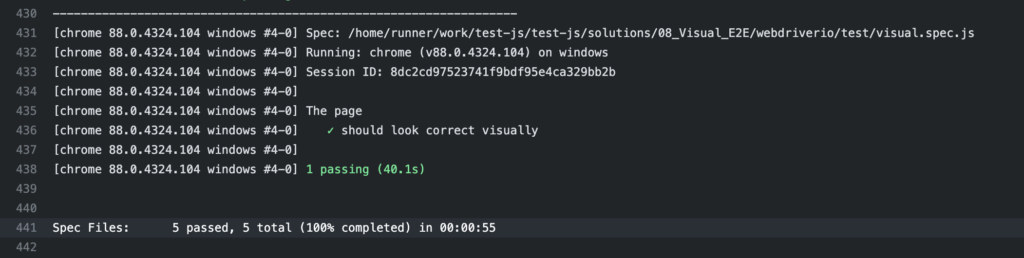

Here’s another example of tests that actually ran and passed:

What about reports for managers?

What about sending HTML reports to managers or team members or developers?

Try to start without this extra burden of code and maintenance and see if anyone actually cares that such reports are missing. Our bet is that what managers and team members really care about is the quality of the software. From speaking to engineers all over the world, we find that nobody actually does anything with these reports besides generating them. Hence, if we have a reliable signal coming from GitHub Actions that tells us the quality of the software, then that will be sufficient.

The Selenium WebDriver commands

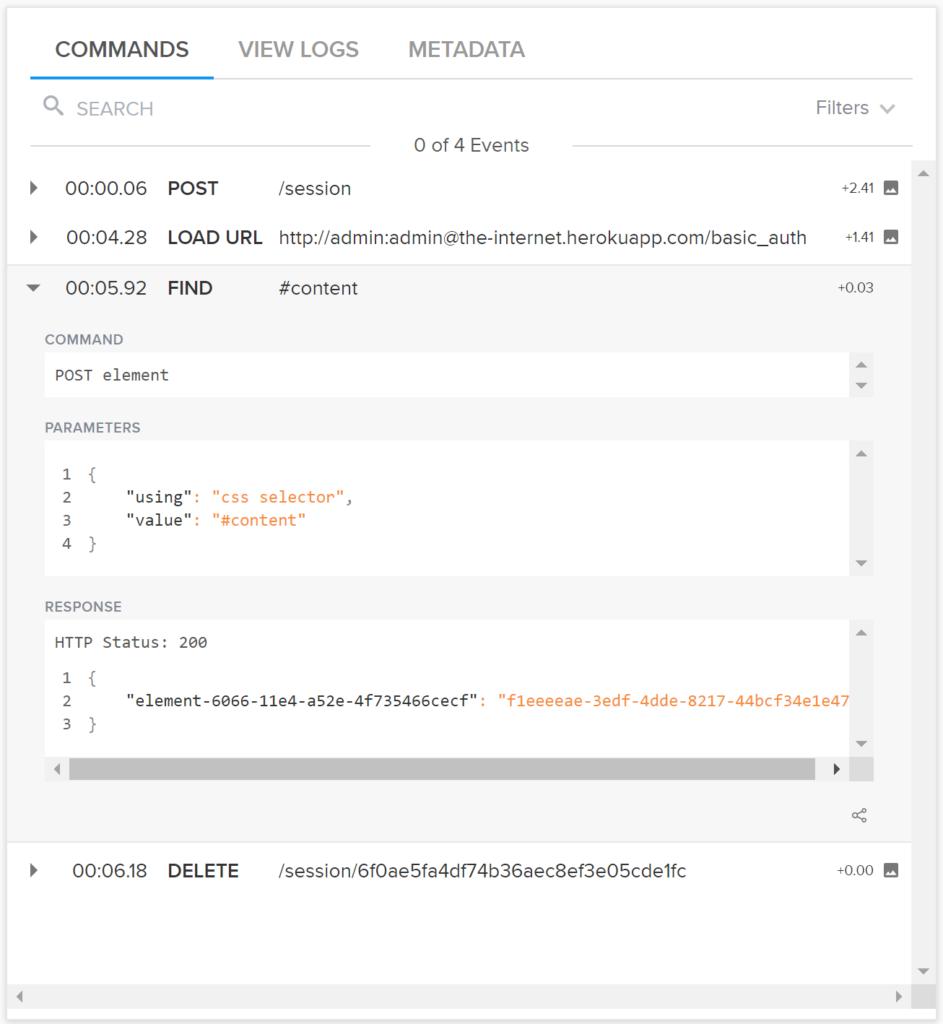

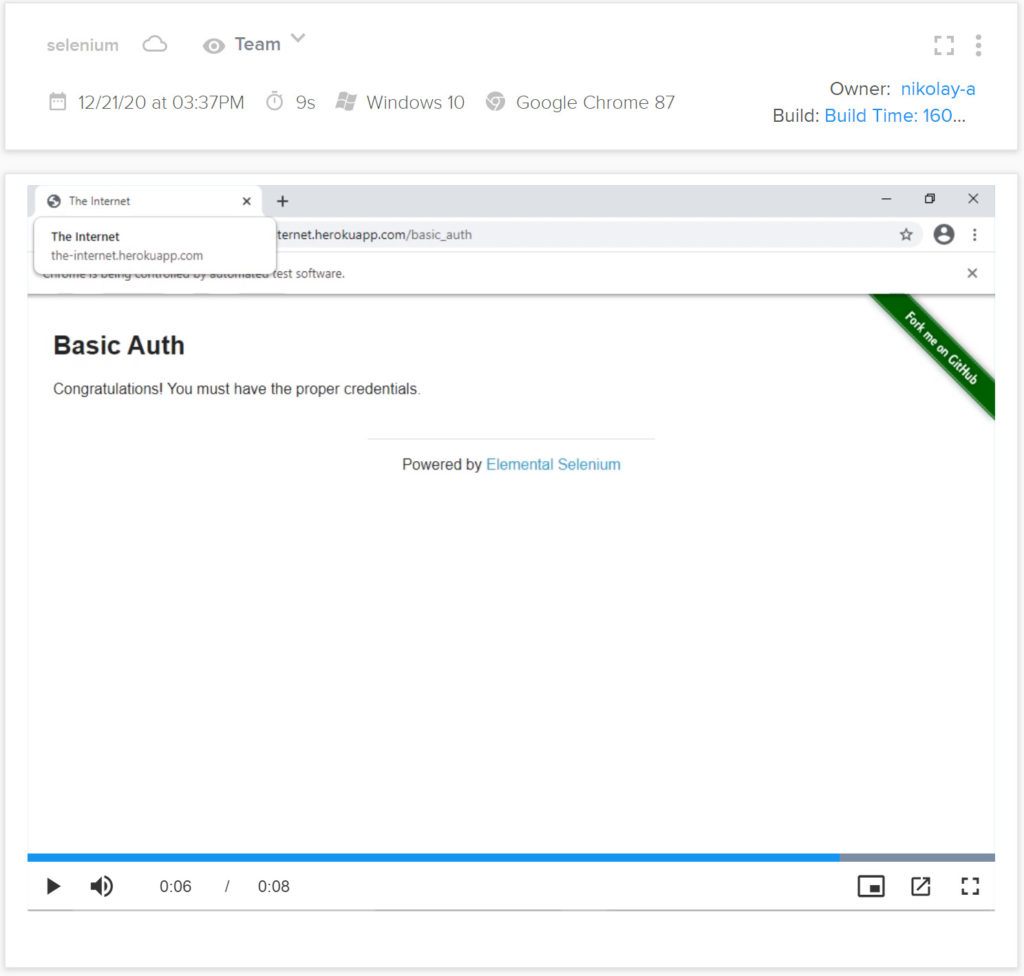

The CI systems can’t show WebDriver commands by default as the CI systems are not configured to read this information. However, gathering and displaying this information is extremely easy using a Selenium Grid through a RemoteWebDriver().

The easiest, most cost-effective, and lowest-maintenance way to access log information from WebDriver is to purchase a Grid as a Service offering such as Sauce Labs or BrowserStack. These services automatically provide all of the relevant information when you run your tests through them, like this:

// Running a test in Sauce Labs

SauceSession session = new SauceSession();

WebDriver driver = session.start();

// WebDriver requires a fully qualified URL, including the scheme
driver.get("https://www.ultimateqa.com");

What’s the output of this implementation?

- Selenium command logs

- Videos and screenshots

By the way, you can learn how to do all this in the Continuous Integration portion of the course.

What else do we need?

So far we have learned that, with almost zero maintenance cost, we can have rich logs from our programming language, failure output, Selenium commands, videos, and screenshots. Hence, do we actually need to take extra time to learn how to use a logging framework, implement it, and then maintain it?

Nopes 🙅🙅

Conclusion

In conclusion, the best software programs are those that are the easiest to maintain. We can achieve fantastic, fully-featured logging with very simple technologies. How? By using a CI/CD pipeline and a Grid as a Service provider. Alternatively, we can spend implementation time reinventing the wheel ourselves and then take on the maintenance costs of that new implementation. We should prefer the former.

But don’t you think that for API automation testing good logging is essential?

I’m not disagreeing, just curious, what kind of logging do you want in API automation?