Automation engineers are always looking for ways to optimize their practice and get the most reliable results. That reliability is the difference between having confidence in a feature launch and going over deadline and over budget. As all engineers are aware, there’s a huge difference between manual testing and automated testing.

Even if we had all of the time in the world to manually review every single feature, the sheer drudgery of approaching the volume of testing required would lead us to leave our careers due to burnout, and rather quickly at that.

That being said, it’s important to note that boundless and overloaded automation testing is not always a good thing.

Sometimes, and by that we mean most of the time, less UI automation is actually preferable.

This can seem counterintuitive.

After all, the novice may assume that the more you test something, the more bugs you’ll find and the more confident you can be in it.

But software testing is not akin to the safety testing of physical products.

In most software environments, automation gathers so much data that it takes real specialists to turn it into actionable reports.

The Observation Effect

In the software world, the ‘observation effect’ can sometimes come into play.

We all understand that avoiding anti-patterns, and the momentum behind them, keeps us from digging a hole we cannot get out of. But overcompensating for this can be an issue too.

For instance, companies that run thousands of tests in a single day may find that sheer brute-force automation produces many false positives.

In most environments, automation engineers using platforms such as Selenium can address only a few complex false positives a day, because each one needs manual review.

- If failures numbering in the thousands come back to you, you can hardly dedicate the manpower to resolving them all.

- However, even if you could afford that labor and time cost, the effort may be redundant due to the hollow nature of false positives. In effect, substanceless errors would waste your time.

In fact, as per our example:

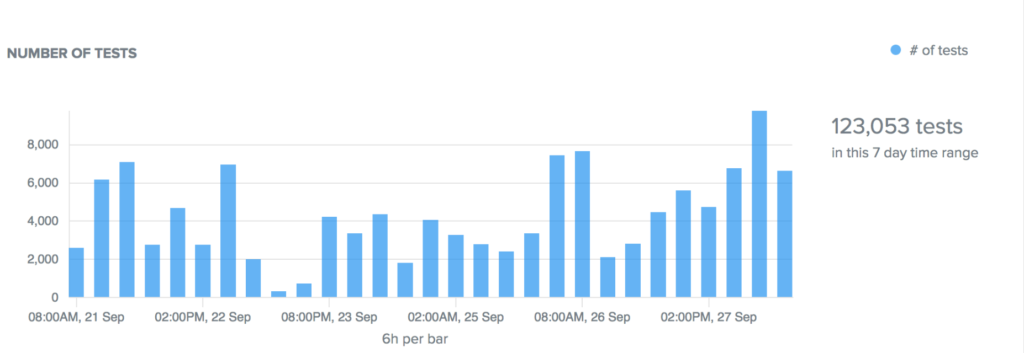

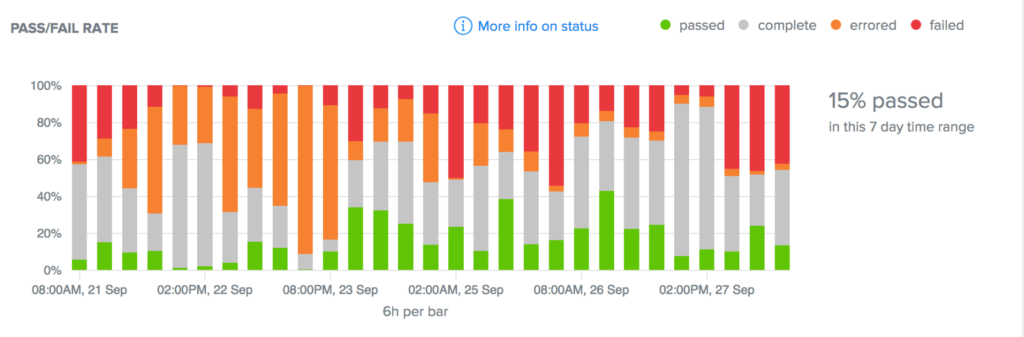

- 123k automated UI tests in 7 days, an arguably overwhelming number, returned only a 15% pass rate.

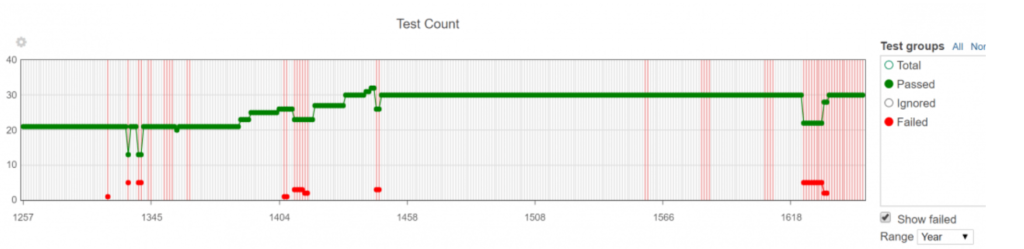

Take a look at this graph and see how only 15% of the tests passed.

That in itself is astonishingly low, and it simply does not reflect the actual quality of the features under test.

- To comb through those errors would take years upon years, as over 104,000 bugs were “presumably” present.
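As a rough back-of-the-envelope check on those numbers, the sketch below works out how many executions failed and how long one engineer would need to review them. The test count and pass rate come from the example above. The triage rate of three failures per day is only our assumption, standing in for "a few", and is not a measured figure.

```python
# Figures quoted in the example above.
TOTAL_TESTS = 123_000
PASS_RATE = 0.15

# Assumption (not from the data): "a few" false positives a day is taken as 3.
TRIAGED_PER_DAY = 3


def failed_tests(total: int, pass_rate: float) -> int:
    """Number of executions that did not pass."""
    return round(total * (1 - pass_rate))


def triage_years(failures: int, per_day: int) -> float:
    """Calendar years needed to review every failure at a fixed daily rate."""
    return failures / per_day / 365


failures = failed_tests(TOTAL_TESTS, PASS_RATE)
print(f"{failures:,} failed executions")
print(f"{triage_years(failures, TRIAGED_PER_DAY):.0f} years for one engineer")
```

At that assumed rate, a single engineer would need roughly 95 years to look at every failure, which is why reviewing them all is not a realistic option.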

So, we need to figure out ways to reduce the false positive rate.

Less UI Automation Is the Answer

Again, for those unfamiliar with our trade, it can seem as though testing a finished product less is simply inviting bad luck to come your way, as if by extension you are asking your end users to test and report for you.

Additionally, stretching this testing over a longer amount of time could be considered worthwhile.

As per the graph above, you can see that testing more slowly over the course of one year can help you mimic the usage patterns of your actual end users, and you will also find that fewer false positives are triggered that way.

This frees up the time and labor you need to actually address the bugs that testing returns.

Returning Trust to Automation

It’s true that, for the most part, automation’s reputation has been sullied. This leads teams to cut corners, ignore actual bugs, or lump them in with false positives. It can sometimes even delay production cycles indefinitely.

Being able to trust your automation process means being able to utilize it correctly.

It means carefully spreading your test runs over a longer period of time. This ensures that a single feature is better understood and can be better relied upon, and that the testing mimics the actual behavior of the feature itself.

To use a great example, we know that login pages rarely break for most account access requests.

This is because the login page is one of the most important, prominent, and unavoidable areas of the UI your visitors will see, so it is thoroughly examined for security and quick access.

That means that after preliminary testing, UI automation that is slowed down or run less frequently can ensure that the rare test failures signify real regressions. In other words, you get results that are much more reliable.

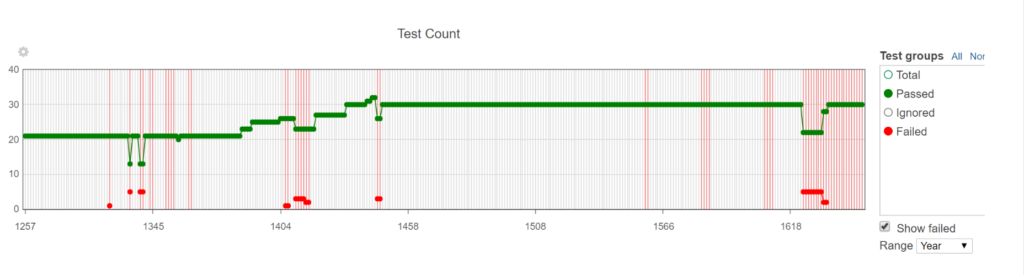

As you can see from the graph above, every failure was the result of a well-flagged, fixable bug. That’s the kind of accuracy we hope to achieve, sometimes even reaching a 99.5% pass rate.

Simple Advice

In all quality control measures, no matter where and how they are applied, an acceptable limit must be found.

So, instead of brute-forcing thousands of tests, we can set a target pass percentage per number of testing cycles. These cycles should be relevant and manageable, and they should give us a more consistent picture.

If your UI automation is not giving you a passing and accurate result at least 99.5% of the time, then go back to your reliability testing and give automation a break.

As per this example, that means you should have no more than five false positives out of one thousand test executions.
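To make that threshold concrete, here is a minimal sketch of how a team might check a run against the 99.5% bar. The function names are ours and purely illustrative; they are not part of Selenium or any other tool. `Fraction` is used so the comparison at exactly 99.5% is not thrown off by floating-point rounding.

```python
from fractions import Fraction

# The 99.5% bar discussed above, stored exactly.
TARGET_PASS_RATE = Fraction("0.995")


def allowed_failures(executions: int, target: Fraction = TARGET_PASS_RATE) -> int:
    """Largest number of failures that still meets the target pass rate."""
    return int(executions * (1 - target))  # truncation is a floor here (non-negative)


def meets_target(executions: int, failures: int,
                 target: Fraction = TARGET_PASS_RATE) -> bool:
    """True if the observed pass rate is at or above the target."""
    if executions <= 0:
        raise ValueError("executions must be positive")
    return Fraction(executions - failures, executions) >= target


print(allowed_failures(1000))          # failure budget for 1,000 executions
print(meets_target(1000, 5))           # exactly on the bar
print(meets_target(123_000, 104_550))  # the brute-force run from earlier
```

For one thousand executions the budget is five failures. For a run the size of the 123k example it would be 615, far below the roughly 104,000 failures that run produced.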

This can sound like an overly hopeful statistic, but it’s actually not as fantastical as you may imagine.

Is that impossible?

Not at all. I ran the team that had these execution results below…

Automated tests executed over a year

Sadly, I no longer have the exact passing percentage of these metrics.

But if you do an estimation, you’ll see that the pass rate of this graph is high.

You can see the red dots on the graph, which signify a failure. Note one of the long non-failure gaps between build ~1450 and ~1600. That’s ~150 builds of zero failures.
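If you have your own build history, a gap like that is easy to measure. The sketch below finds the longest run of consecutive passing builds. The `history` list is made-up sample data for illustration only and is not the data behind the graph.

```python
def longest_green_streak(results: list[bool]) -> int:
    """Length of the longest run of consecutive passing builds."""
    longest = current = 0
    for passed in results:
        # Extend the current streak on a pass, reset it on a failure.
        current = current + 1 if passed else 0
        longest = max(longest, current)
    return longest


# Hypothetical build history: True = build passed, False = build failed.
history = [True] * 40 + [False] + [True] * 150 + [False] + [True] * 25
print(longest_green_streak(history))
```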

Furthermore, I can say that every failure on this graph was a bug that was introduced into the system, not a false positive of the kind that is so common in UI automation.

By the way, I’m not saying this to impress you…

Rather, to impress upon you the idea that 99.5% reliability from UI automation is possible and I’ve seen it.

To Conclude

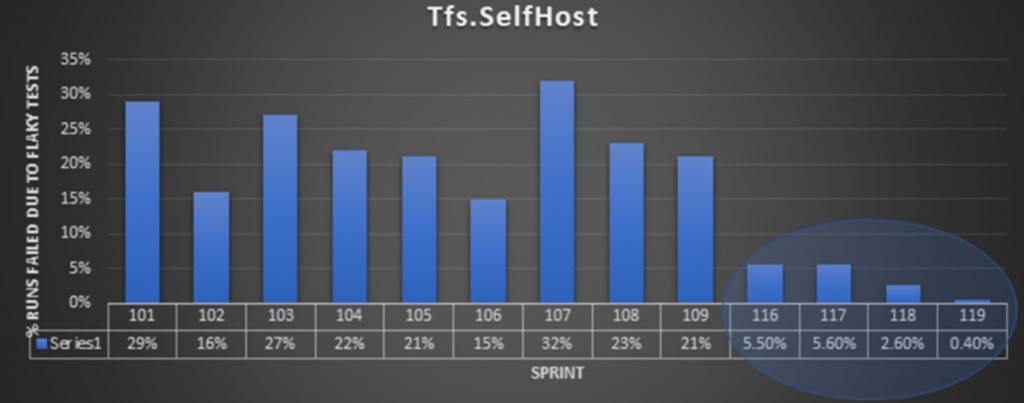

Rely on automation stability as a tool. Huge software brands such as Microsoft understand the importance of this, as you can see from the included article.

They’re investing two years and thousands of dollars to ensure that stability is increased, as opposed to running an excessive amount of testing with impressive volume yet inaccurate results.

Following this example, along with the advice above, should help you stay a cut above the rest.