Let’s imagine an application called QTP Tutorial. The development team is going to start work on this application tomorrow. The ultimate goal of the software development process is to deliver the highest-quality QTP Tutorial in the shortest amount of time possible. Seems like a reasonable goal. At the forefront of delivering and ensuring quality is the Quality Assurance team. This team consists of two talented QA engineers, Lynda and Joseph. Each will work on separate components, with the shared goal of creating QTP Tutorial.

Lynda has decided to use a recording tool for her automated GUI testing. Armed with the ability to create automated functional tests with a few clicks, Lynda is going to ensure that her components of the application are top-notch. She wants to automate such a high percentage of her test cases that her extensive regression suite will catch every bug.

Joseph, on the other hand, is slower and more methodical. He decides to build an automated testing framework to help test his components of the QTP Tutorial application. Joseph is going to take his time and write code to create a stable and robust framework. He believes that his slow, up-front effort will pay off in the long run.

Two very different approaches, one goal. Which approach is going to produce a more stable, higher-quality application?

Let’s fast forward 20 weeks…

Record and playback vs automated testing frameworks

The first QA, who shall remain nameless, has accomplished a lot. This QA has 60 automated test cases. Thirty of these automated GUI tests are actually functional and running. This automation suite sends out 200 emails per day, notifying the development team of possible application failures. The scary thing is that of the 30 working automated test cases, only 10 actually test different functionality of her component. Obviously, that’s because the QA needs 3 copies of each automated test case, one per environment. Otherwise, how will the QA perform regression testing as new code moves from Dev to Test to Production?

What about QA number 2? This QA has managed to automate 126 test cases for their component of QTP Tutorial. Of those, 102 are functional and running. This automation suite sends out 3 to 10 emails per week, reporting actual application failures to the entire team. Oh, and those 102 automated test cases cover 102 different pieces of functionality. Each piece of functionality needs only one test case, and that test case can be run in any environment the team wants.

WOW! How is it possible that two individuals, working on the same application with the same goal, ended up in such different situations? And which automated testing approach provides a higher return on investment (ROI) for the employer?

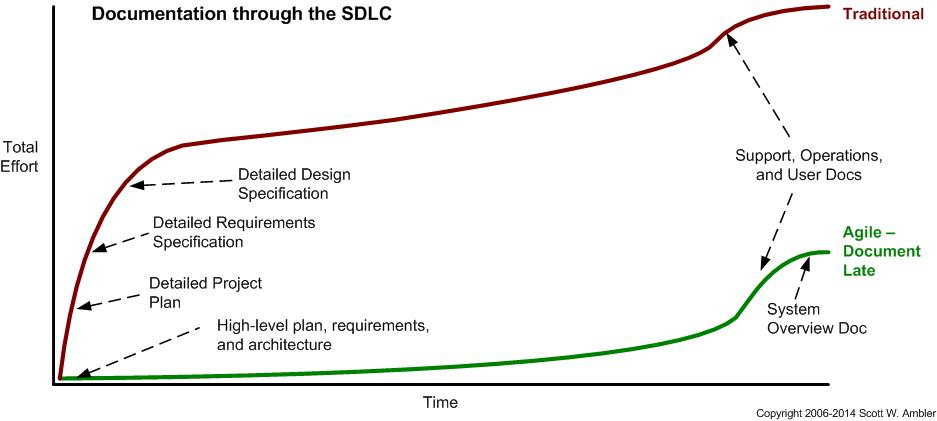

To answer these questions, let’s explore a graph of the software development life cycle of QTP Tutorial, an application that is thriving and growing.

What does a graph of automated testing look like during software development?

The very first thing to notice about this graph is the axes. The y-axis represents a count: 1, 2, 3, 4, and so on. Here it counts the features developed, the test cases created, the recorded GUI tests, and the GUI tests automated through a testing framework. The x-axis represents the number of weeks since the QTP Tutorial project began.

Next, notice the blue line. This line represents the number of features that have been developed and moved to production. A feature is a piece of functionality in the application. In this scenario, the graph shows that 1 feature per week is developed and moved to the production environment. What’s important is not the number but the fact that the growth is linear. This means that QTP Tutorial is always growing and always improving, every single week.

The orange line shows the total number of test cases that exist for the QTP Tutorial application. The number of test cases grows at a much faster rate than the number of features being developed. This should make sense: for every feature developed, there is more than one test case, right? For example, what if we need a login feature? We need to test the boundary conditions of each field, positive and negative scenarios, performance, and possibly security. So as we develop more features for QTP Tutorial, the number of test cases increases much faster. At the end of 20 weeks, with 20 features live, there are 160 possible test cases for QTP Tutorial.

I hate bugs

Ready for the fun part? The gray line represents the total number of automated GUI tests created with a Record and Playback tool. This is Lynda’s work. In week 1, Lynda started strong. She recorded more automated GUI tests than there were possible test cases.

HUH??? How is that possible?

Well, because Lynda is using a recording tool, she needs to record one automated GUI test for every environment. QTP Tutorial moves from Dev to Test to Production, so there are 3 environments. Lynda actually automated 3 of the 8 possible test cases, but she has 9 scripts (3 environments × 3 test cases) because she needs one recording per environment. This is a CRITICAL point. Because the recording tool captures the manual test steps exactly as performed, Lynda cannot parameterize the URL. The URL of the application changes from “dev.QTPTutorial.net” to “test.QTPTutorial.net” to “www.QTPTutorial.net”. Because of this single difference in the script, she needs 3 files to cover automated testing in all 3 environments.
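For contrast, here is a minimal Python sketch of what parameterizing the URL can look like in a hand-written test. It assumes the environment is chosen through a hypothetical `QTP_ENV` environment variable and that each site is served over HTTPS; `BASE_URLS`, `base_url`, `login_page_url`, and the `/login` path are illustrative names, not part of any real tool. The point is that one test definition covers Dev, Test, and Production.

```python
import os
from typing import Optional

# Hypothetical environment-to-host mapping. The host names come from the
# QTP Tutorial story; the "https://" scheme is an assumption.
BASE_URLS = {
    "dev": "https://dev.QTPTutorial.net",
    "test": "https://test.QTPTutorial.net",
    "prod": "https://www.QTPTutorial.net",
}


def base_url(env: Optional[str] = None) -> str:
    """Return the base URL for `env`, falling back to the QTP_ENV variable, then "dev"."""
    name = (env or os.environ.get("QTP_ENV", "dev")).lower()
    if name not in BASE_URLS:
        raise ValueError(f"Unknown environment: {name!r}")
    return BASE_URLS[name]


def login_page_url(env: Optional[str] = None) -> str:
    # "/login" is a hypothetical path used only for illustration.
    return base_url(env) + "/login"


if __name__ == "__main__":
    # One test definition, three environments: only the parameter changes.
    for env_name in BASE_URLS:
        print(env_name, login_page_url(env_name))
```

With this shape, pointing the same test at another environment is a matter of passing a different value (or setting `QTP_ENV`), rather than keeping a separate recorded file per environment.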

Anyway, despite this burden, Lynda continues to do an excellent job and records many automated scenarios for several weeks. But something strange happens during week 8. Lynda’s output drops. Is she ill? No, Lynda is fine. But the application has changed: as more functionality was added, it became slower. So Lynda’s automated test cases begin emailing the development team. Over 50 automated test cases are screaming “Failure, I cannot find the ‘email’ field, the application is broken”. Two hundred emails per day go out reporting these false failures. The team gets angry and asks Lynda to fix these automated tests, because the application works fine. So Lynda spends a ton of time opening each of her 54 automated test cases to increase the wait time from 5 seconds to 10 seconds. Of course, she also needs to retest all of the scripts to make sure they still work correctly. From this point on, her recording of new automated test cases slows down because she has to spend time maintaining the existing scripts.

After about week 13, Lynda gives up on her Record and Playback tool because she can’t both maintain 60 scripts and deliver a quality application. After all, the orange line, which represents the total number of test cases, is climbing fast. So, to keep up with the functionality currently being developed, Lynda falls back on manual testing and does regression testing only when she has time. The scary part is that more features are developed every week, so the regression-testing gap grows larger every week. More bugs start showing up as more of the application goes untested.

Bugs hate Joseph

By now, we all know that Joseph, with his automated testing framework, helped the company get a higher ROI than Lynda did. The yellow line of the graph traces Joseph’s journey through testing QTP Tutorial.

It was hard for Joseph in the beginning. He had to create a framework architecture, code the framework’s logic, and design reusable methods. By the end of week 4, Joseph had automated only 6 of the 36 possible test cases. But Joseph knew that this was normal, and he explained it to his management.

At about week 6, something magical happened. The framework was actually working, and Joseph began to create automated test cases much faster than ever before. In fact, because he was creating reusable methods, every new automated test case made his framework more efficient. By the end of week 13, Joseph’s automated test cases outnumbered Lynda’s. Furthermore, his 70 automated test cases covered 70 distinct test cases out of 104 possible ones. This means that he had about two thirds of the possible test cases automated. Because Joseph created methods that take parameters, each of his automated GUI tests could be run in any environment. With only one copy of each test to look after, debugging the automated test cases is a breeze.

Remember week 8, when QTP Tutorial became a little slower because the team was adding so much functionality? Well, Joseph’s automated test cases also began to fail and send a lot of false emails. To fix the issue, Joseph had to open only one file. Inside this file was a method called ‘loginToQTPtutorial’. Inside that method was a line of code that said, “Wait for 5 seconds”. Joseph changed this line to say, “Wait for 10 seconds”. Because every one of his 30 test cases used this method, the fix only had to be made in a single place.
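Here is a minimal Python sketch of that single-place fix, assuming a hypothetical page object whose `find(locator)` returns `None` until an element appears. `login_to_qtp_tutorial` is a snake_case stand-in for a method like ‘loginToQTPtutorial’; `LOGIN_WAIT_SECONDS`, `wait_for`, `FakeElement`, and `FakePage` are illustrative names, not a real library API. Because every test calls the same login function, changing the timeout is a one-line edit.

```python
import time
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")

# The single place that controls how long login waits for the page.
# Raising this from 5 to 10 seconds fixes every test that logs in.
LOGIN_WAIT_SECONDS = 10.0


def wait_for(condition: Callable[[], Optional[T]], timeout: float, poll: float = 0.05) -> T:
    """Poll `condition` until it returns something other than None, or time out."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result is not None:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll)


def login_to_qtp_tutorial(page, username: str, password: str) -> None:
    """Shared login step reused by every test case (hypothetical page API)."""
    email_field = wait_for(lambda: page.find("email"), LOGIN_WAIT_SECONDS)
    email_field.enter_text(username)
    page.find("password").enter_text(password)
    page.find("submit").click()


class FakeElement:
    """Records interactions so the sketch runs without a browser."""

    def __init__(self, name: str, log: List[str]):
        self.name = name
        self.log = log

    def enter_text(self, text: str) -> None:
        self.log.append(f"type {self.name}: {text}")

    def click(self) -> None:
        self.log.append(f"click {self.name}")


class FakePage:
    """Simulates a slow page whose fields only appear after `delay` seconds."""

    def __init__(self, delay: float):
        self.ready_at = time.monotonic() + delay
        self.log: List[str] = []

    def find(self, locator: str) -> Optional[FakeElement]:
        if time.monotonic() < self.ready_at:
            return None
        return FakeElement(locator, self.log)
```

For example, logging in to `FakePage(delay=7)` would raise `TimeoutError` with a 5-second limit but succeed with the 10-second limit, and no individual test case has to change.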

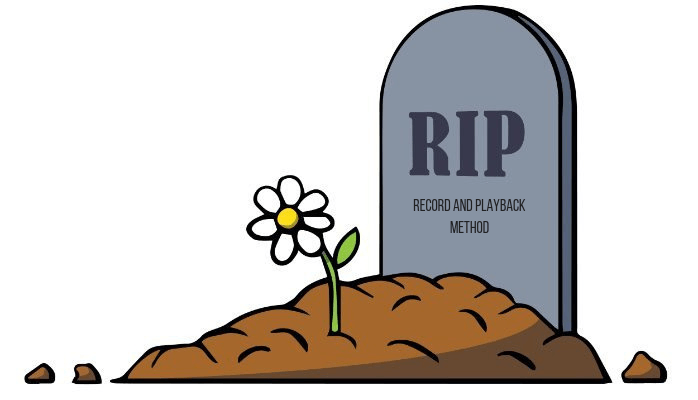

So why is the Record and Playback method of automated software testing dying?

The true story above clearly illustrates the main disadvantages of Record and Playback. Yes, TRUE. I was personally involved in this exact scenario at one point in my career. The names and numbers are different, but this scenario still plays out every single day at many IT companies.

The story about Joseph, Lynda, and QTP Tutorial conveys several important points. First and most important, recorded automated tests are a nightmare to maintain. Applications change all the time, and as a result, automated tests will break. Trying to avoid this is like trying to avoid air. So the point is not to avoid it, but to find the best way to deal with the consequences. Imagine poor Lynda, who had to make the same fix in every one of her recorded scripts. Joseph only had to make the change in his method, and every test case using that method was updated automatically.

Second, with an automation framework, every new test case makes the framework more stable and makes future test cases easier to create. If my first test case needs login functionality, I turn it into a method, and that method goes into my library. For the next test case, which involves logging in and filling out a form, I can reuse my ‘login’ method and add a new method to the library that fills out the form. Now I have 2 methods that I never need to recreate. And so, the framework slowly grows into a stable automated testing machine.
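Here is a minimal Python sketch of that library idea. The `Browser` class is a hypothetical stand-in that only records the actions it is asked to perform, so the example runs without a real browser; `login`, `fill_out_form`, the `/login` and `/form` paths, and the `run_login_case` and `run_login_and_form_case` functions are all illustrative names. The second test case reuses `login` unchanged and adds only the new form step.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Browser:
    """Hypothetical stand-in that records actions instead of driving a real browser."""

    base_url: str
    actions: List[str] = field(default_factory=list)

    def open(self, path: str) -> None:
        self.actions.append(f"open {self.base_url}{path}")

    def enter_text(self, field_name: str, text: str) -> None:
        self.actions.append(f"type {field_name}={text}")

    def click(self, button: str) -> None:
        self.actions.append(f"click {button}")


# Reusable library methods: written once, shared by every test case.
def login(browser: Browser, username: str, password: str) -> None:
    browser.open("/login")
    browser.enter_text("email", username)
    browser.enter_text("password", password)
    browser.click("submit")


def fill_out_form(browser: Browser, values: Dict[str, str]) -> None:
    browser.open("/form")
    for name, value in values.items():
        browser.enter_text(name, value)
    browser.click("save")


# Test cases composed from the library.
def run_login_case(browser: Browser) -> None:
    login(browser, "user@example.com", "secret")


def run_login_and_form_case(browser: Browser) -> None:
    login(browser, "user@example.com", "secret")  # reused, not re-recorded
    fill_out_form(browser, {"name": "Joseph", "comment": "Hello"})
```

Because the base URL is just a constructor argument, running the same two cases in another environment means creating a `Browser` with a different URL, not writing new scripts.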

In contrast, with a recording tool, every scenario has to be recorded from scratch. One script to log in. Another script to log in and fill out a form. Almost the same functionality, twice. Wait! We need to multiply that by 3, because we need one script for every environment. Now we have 6 scripts with the same login functionality. To avoid this, I could start passing parameters to my recorded scripts. But then I need to write the logic that makes this possible. It seems that if I want to do this, I’m making my way toward an automated testing framework anyway…

Conclusion

Recorded automated testing is a dying art. Your job as a QA Automation Engineer is to provide your employer with the highest ROI that you can, and you cannot accomplish this with Record and Playback. Many companies know this and are now looking for specialized SDETs (Software Development Engineers in Test). It’s time to adapt to the new industry standards. Doing so will give you job security and even higher pay. So why not take the time to learn this skill and become someone employers want to hire?

I am totally leaving a comment here, dude.

I was kicked out as a consultant when I pointed this out. They had just bought 4 licenses of QTP, and what a nightmare of buggy software it was.

Mattias, are you joking? You were kicked out for this? That’s insane! It sounds like you wouldn’t want to be at a place that doesn’t take criticism and doesn’t listen anyway.

I’ve had similar experiences. At one company, they had over 100 recorded tests when I was hired. I recommended starting a whole new suite based on a new framework, which was met with some resistance. So I made a deal with the tester who had originally created the tests: I would keep every test he could run against the new QA environment. Not one of the tests ran successfully.

That’s funny! I feel like you did the right thing. It’s always hard to tell someone that they wasted months on work that will be thrown away. It’s easier to just leave it and let it slowly fizzle out, which is kind of what happened when they tried to run the tests against a new environment.

Hi, I’ve been testing for years, but I am new to automation! I want to learn (I’m on my own, as there isn’t anyone at my job to help), but sometimes it feels overwhelming even knowing where to start. I’ve considered a course you offer, the complete Selenium course. Is this truly the place to begin? I know you have a Kickstarter project I could support and thereby get enrolled in that course. I’ve hesitated because, again, it feels so overwhelming!

Hi Kim,

This is such a common scenario. I was in the exact same place many years ago when I started my transition into test automation. It’s still a hard and overwhelming transition. Let me recommend this article. It’s a collection of my favorite test automation resources, gathered over many years, and I use them regularly:

https://ultimateqa.wpmudev.host/best-selenium-webdriver-resources/

Regarding the Kickstarter project, yes, I 100% recommend it for anyone wanting to learn excellent test automation. I’ve been teaching automation for 5 years, have taught over 50,000 students, and my current course is rated #1 in the world. But I’m going to make it even better in this Kickstarter version.

I’m 100% sure that it will teach you what you need to know to go from beginner to top 90% automation engineer. In my entire career, I’ve never met or interviewed an automation engineer who could do even half of what I teach in this course. That’s why I’m so confident in the knowledge it provides. I offer a 30-day money-back guarantee if you are not satisfied. So try it out; I’m sure you will find everything you need in the course to get you to an excellent level in test automation!

Nickolay, thanks for the reply and for the reassurance that others feel this exact way! I am going to jump in and start with your course!